Remote visualization with DCV

CCME provides multiple ways of accessing remote graphical sessions to run your pre- and post-processing workloads.

Remote visualization is delivered by the DCV software.

All DCV sessions are made available through the ALB deployed as part of the Cluster Management Host stack.

The URL to reach them will be https://ALB_URL/dcv-INSTANCE_ID/#SESSION_ID, with:

ALB_URL: the DNS name of the ALB, or CCME_DNS if you specify it

INSTANCE_ID: the ID of the EC2 instance on which the session runs

SESSION_ID: the DCV session ID
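As an illustration of the URL pattern above, here is a minimal Python sketch that assembles a session URL from its three parts. The function name and the sample values are hypothetical and are not part of CCME.

```python
def dcv_session_url(alb_url: str, instance_id: str, session_id: str) -> str:
    """Build the URL of a DCV session served through the ALB."""
    # Pattern taken from the text: https://ALB_URL/dcv-INSTANCE_ID/#SESSION_ID
    return f"https://{alb_url}/dcv-{instance_id}/#{session_id}"


# Hypothetical values, for illustration only.
print(dcv_session_url("ccme.example.com", "i-0123456789abcdef0", "my-session"))
```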

By default, each user is allowed only 1 session for each type of DCV session (see below: HeadNode, a dcv* partition, or a Windows instance).

If a user tries to create more than one session, they will get a prompt asking “Do you want to reconnect to the existing session?”.

This limitation can be changed by updating the corresponding EnginFrame service: in the EnginFrame “Admin Portal”, go to “Manage/Services”, edit the selected DCV service, click on “Settings”, and change the “Max number of Sessions” parameter. You can also change the “Session Class”: for example, setting the same value for all DCV services allows only 1 DCV session across all of them.

Note

If you modify one of the EnginFrame DCV services generated by CCME, be aware that these services will be regenerated by CCME the next time you run pcluster update-cluster. You can safely publish unmodified DCV services, but if you need to customize them, we recommend that you first create a copy of the services, and then customize and publish these copies.

Prerequisites

You need to check the limits of your AWS account to match your needs in terms of remote visualization. We list below the quotas you need to review and ensure that they are high enough to support your use cases:

EC2: ensure that you have enough vCPUs for the different types of instances you will use across all your clusters.

Elastic Load Balancing (ELB):

Rules per Application Load Balancer: if you intend to have a large cluster of remote visualization instances (Linux and/or Windows), you need to ensure that this limit is above the total number of remote visualization instances you will allow on all your clusters. Reminder: only 1 session can run on a Windows instance, but multiple sessions can run concurrently on a Linux instance; only the number of instances counts toward this limit.

Target Groups per Application Load Balancer: same as above, as 1 target group is created per DCV instance.
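The quota check described above can be sketched as a simple comparison. This Python example assumes that each DCV instance consumes one ALB rule and one target group, and that both quotas must be strictly above the planned number of instances. The function name is hypothetical, and the quota values must be read from your own AWS account.

```python
def alb_quotas_sufficient(max_dcv_instances: int,
                          rules_quota: int,
                          target_groups_quota: int) -> bool:
    """Return True if both ALB quotas exceed the planned number of DCV instances."""
    # Only instances count toward these limits, not the sessions running on them.
    return (rules_quota > max_dcv_instances
            and target_groups_quota > max_dcv_instances)


# Hypothetical figures: 80 DCV instances planned across all clusters.
print(alb_quotas_sufficient(80, rules_quota=100, target_groups_quota=100))
```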

Linux

Headnode

A service in EnginFrame is available to start a DCV session on the HeadNode.

Its name is DCV Linux - Headnode; by default, it is not published to any user.

The DCV session on the HeadNode is not managed by Slurm, but by DCV Session Manager.

Compute nodes

All instances placed in Slurm partitions named dcv* will have DCV installed and available.

A service is automatically created in EnginFrame to start a DCV session on each dcv* queue.

Each service is named DCV Linux - queue <queueName> (where queueName is the name of the DCV queue); by default, it is not published to any user.

Nodes are managed by Slurm and ParallelCluster as standard compute nodes, so instances are dynamically started and terminated depending on the needs of the users.

Windows

Aside from Linux remote visualization nodes, CCME provides a way to dynamically manage Windows instances for remote visualization. In this case, the instances are not managed by Slurm and ParallelCluster, but directly by CCME through multiple mechanisms, mainly a new EnginFrame plugin that manages the lifecycle of the Windows instances along with the requested remote sessions.

Prerequisites and configuration

The only prerequisite to set up a Windows DCV fleet is an AMI prepared with the following requirements:

OS version: Windows Server 2019 or greater

Installed software

DCV server: configured to work properly on the target instance type (e.g., with or without GPU), and with DCV Session Manager.

DCV Session Manager Agent <https://docs.aws.amazon.com/dcv/latest/sm-admin/agent.html> (DCVSM Agent). Its final configuration will be done at startup by CCME through an EC2 User-Data script.

NVIDIA drivers: optional, depending on the target instance type.

AWSPowerShell: tools to call AWS APIs. Note that these tools are installed by default on AWS standard Windows AMIs.

User authentication: your AMI must be configured to authenticate against your Active Directory, or use local users (not recommended)

Shared file systems: any shared file system that needs to be mounted on the instance must be pre-configured in the AMI. The CCME EC2 User-Data script currently does not handle any dynamic mount points.

You’ll then need to select an instance type compatible with the AMI you created.

To configure CCME, you’ll need to update the following variables in the ParallelCluster configuration file,

in the HeadNode.CustomActions.OnNodeStart.Args parameters:

Instance launch configuration:

CCME_WIN_AMI: the AMI ID.

CCME_WIN_INSTANCE_TYPE: the instance type to use.

CCME_WIN_TAGS (optional, default {}): dictionary of additional tags to apply on the instances of the Windows fleet (see Starting a Windows DCV session for the list of default tags). The format must be a valid “YAML dictionary embedded in a string”. Hence, the whole line must be enclosed in double quotes, and then the value of CCME_WIN_TAGS must be the dict enclosed in escaped double quotes. See the following example: "CCME_WIN_TAGS=\"{'MyTagKey1': 'MyTagValue1', 'MyTagKey2': 'MyTagValue2'}\"".

CCME_WIN_CUSTOM_CONF_REBOOT (optional, default false): you need to set this to true if your PowerShell customization script winec2_config.ps1 reboots the instance.
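Because the quoting of CCME_WIN_TAGS is easy to get wrong, the following Python sketch renders the line from a plain dictionary, reproducing the format of the example above. The helper name is hypothetical, and the sketch deliberately rejects keys or values containing quotes rather than guessing how CCME would handle them.

```python
def ccme_win_tags_arg(tags: dict) -> str:
    """Render the configuration line for CCME_WIN_TAGS from a dict of tags."""
    for key, value in tags.items():
        if any(quote in key or quote in value for quote in ("'", '"')):
            raise ValueError("quotes in tag keys or values are not handled by this sketch")
    # Inner dictionary uses single quotes, as in the documented example.
    body = ", ".join(f"'{key}': '{value}'" for key, value in tags.items())
    # The dict is enclosed in escaped double quotes, and the whole line in double quotes.
    return '"CCME_WIN_TAGS=\\"{' + body + '}\\""'


print(ccme_win_tags_arg({"MyTagKey1": "MyTagValue1", "MyTagKey2": "MyTagValue2"}))
```

The printed line can be pasted as one entry of the Args list in the ParallelCluster configuration file.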

Session launch monitoring from EnginFrame:

CCME_WIN_LAUNCH_TRIGGER_DELAY (optional, default 10): delay (in seconds) between two rounds performed by an EnginFrame trigger to check if the instance has started and if the DCVSM agent on the instance has registered to the DCVSM broker on the HeadNode.

CCME_WIN_LAUNCH_TRIGGER_MAX_ROUNDS (optional, default 100): maximum number of rounds allowed to check the instance startup (hence, the maximum delay for an instance to start and join DCVSM is CCME_WIN_LAUNCH_TRIGGER_DELAY * CCME_WIN_LAUNCH_TRIGGER_MAX_ROUNDS, by default 10 * 100 = 1000 seconds).
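The relation between the two launch monitoring parameters is a simple product. The Python sketch below computes the maximum wait and, conversely, the number of rounds needed to cover a target wait. Both function names are hypothetical helpers for sizing these values.

```python
import math


def max_launch_wait_seconds(trigger_delay: int = 10, max_rounds: int = 100) -> int:
    """Maximum time an instance has to start and join DCVSM."""
    return trigger_delay * max_rounds


def rounds_for_wait(target_wait_seconds: int, trigger_delay: int = 10) -> int:
    """Smallest number of rounds that covers the target wait."""
    return math.ceil(target_wait_seconds / trigger_delay)


print(max_launch_wait_seconds())  # 1000, the default stated above
print(rounds_for_wait(1800, trigger_delay=15))
```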

Session cleanup configuration:

CCME_WIN_INACTIVE_SESSION_TIME (optional, default 600): the instance is terminated if no DCV session has been active on the Windows instance for more than CCME_WIN_INACTIVE_SESSION_TIME seconds. If the value is 0, this control is deactivated.

CCME_WIN_NO_SESSION_TIME (optional, default 600): the instance is terminated if no DCV session is up and running on the Windows instance and the instance was started more than CCME_WIN_NO_SESSION_TIME seconds ago. If the value is 0, this control is deactivated.

CCME_WIN_NO_BROKER_COMMUNICATION_TIME (optional, default 600): time in seconds after which the instance is terminated if the DCVSM broker on the HeadNode cannot be contacted from the Windows instance. If the value is 0, this control is deactivated.

Note

If you need to have multiple configurations for your Windows interactive sessions in a single CCME cluster, you can override the values of CCME_WIN_AMI, CCME_WIN_INSTANCE_TYPE, CCME_WIN_INACTIVE_SESSION_TIME, CCME_WIN_NO_SESSION_TIME and CCME_WIN_NO_BROKER_COMMUNICATION_TIME that you have set in the ParallelCluster configuration file by exporting these variables with the expected values in the action script of your EnginFrame services (e.g., export CCME_WIN_INSTANCE_TYPE=g4dn.xlarge).

In this case, the variables set in the ParallelCluster configuration file are used as default values, and the variables set in an EnginFrame service apply only to that service.

You can thus create multiple DCV Windows services with different configurations. For example:

Allow long-lasting sessions by setting CCME_WIN_INACTIVE_SESSION_TIME to 0.

Propose multiple sizes of instances depending on the application/use-case your users have (e.g., g4dn.12xlarge for heavy 3D modeling, m6a.large for 2D-only sessions…).
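The override behaviour described in this note can be pictured as a merge of two layers: cluster-wide defaults, then per-service exports. The Python sketch below models only that precedence. It is not CCME code, the AMI ID is a placeholder, and ignoring variables outside the five listed ones is an assumption of this sketch.

```python
# The five variables the text lists as overridable per EnginFrame service.
OVERRIDABLE = (
    "CCME_WIN_AMI",
    "CCME_WIN_INSTANCE_TYPE",
    "CCME_WIN_INACTIVE_SESSION_TIME",
    "CCME_WIN_NO_SESSION_TIME",
    "CCME_WIN_NO_BROKER_COMMUNICATION_TIME",
)


def effective_windows_config(cluster_defaults: dict, service_exports: dict) -> dict:
    """Apply per-service exports on top of the cluster-wide defaults."""
    config = dict(cluster_defaults)
    for name in OVERRIDABLE:
        if name in service_exports:
            config[name] = service_exports[name]
    return config


defaults = {
    "CCME_WIN_AMI": "ami-PLACEHOLDER",  # placeholder, not a real AMI ID
    "CCME_WIN_INSTANCE_TYPE": "m6a.large",
    "CCME_WIN_INACTIVE_SESSION_TIME": "600",
}
# A hypothetical "heavy 3D" service with long-lasting sessions.
heavy_3d = {"CCME_WIN_INSTANCE_TYPE": "g4dn.12xlarge", "CCME_WIN_INACTIVE_SESSION_TIME": "0"}
print(effective_windows_config(defaults, heavy_3d))
```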

Note

If you need to update the AMI for your Windows instances (e.g., to apply a security update), you have two ways of doing it:

Export CCME_WIN_AMI in the DCV services in EnginFrame, specifying the new AMI ID. You will need to export this variable in all Windows DCV services if you have several.

Update the CCME_WIN_AMI variable in the ParallelCluster configuration file. For that, you will need to stop the compute fleet of your cluster, update the cluster, and then start the compute fleet again.

Windows session lifecycle

Starting a Windows DCV session

To create a Windows DCV session, you first need to publish the DCV Windows service that is automatically created in EnginFrame.

Log in as an administrator (e.g., efadmin), click on Switch to Admin View and then on Services, select the DCV Windows service, and Publish the service to the selected groups of users.

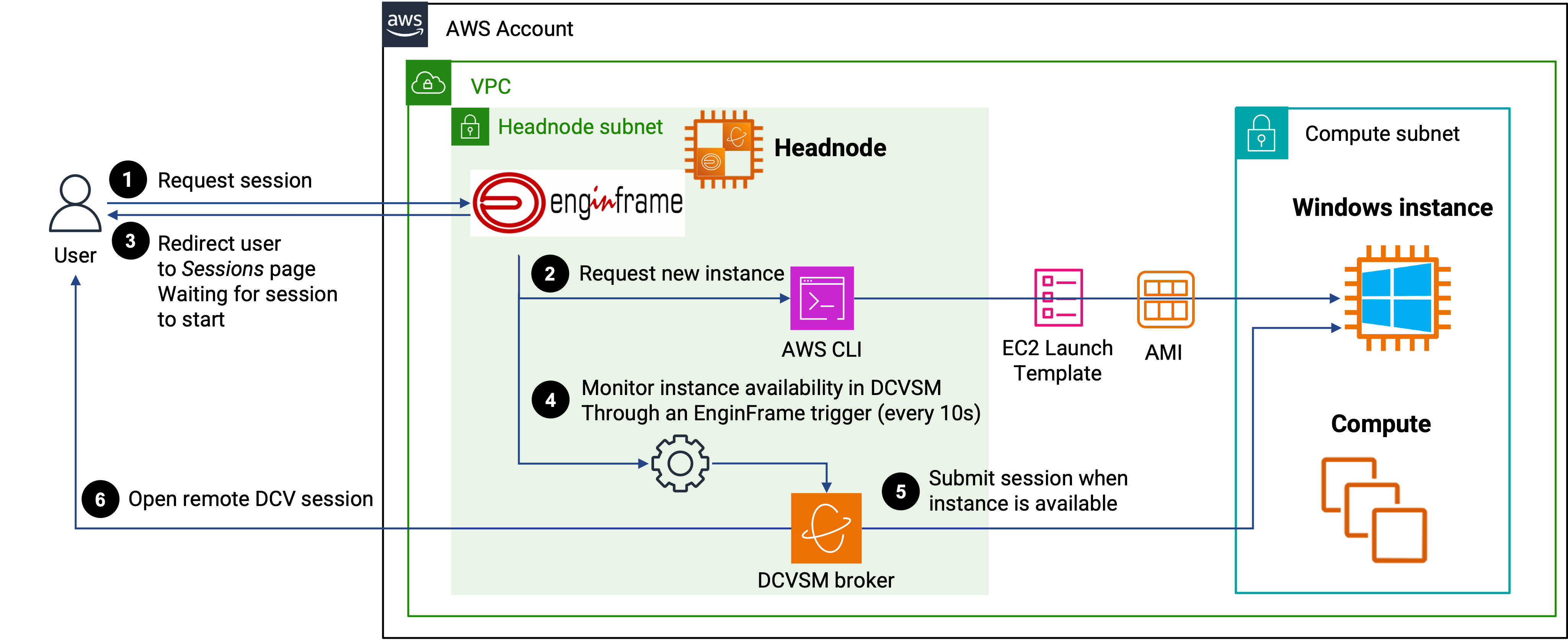

Whenever a user requests a new Windows session through the DCV Windows service created above, the request goes through the following process:

A new EC2 instance is launched.

Each session is tied to a specific EC2 instance: the instance is started when the session is requested and terminated when the session is closed (see Terminating a DCV session and instance cleanup to prevent overspendings for details about EC2 instance termination).

The instance is launched with a Launch Template created by the Management Host (see the CCME_WIN_LAUNCH_TEMPLATE_ID parameter in the ParallelCluster configuration file in HeadNode.CustomActions.OnNodeStart.Args); it is started in the same network as the compute nodes.

The AMI ID used to launch the instance is the one specified by CCME_WIN_AMI.

The instance runs a specific EC2 user-data PowerShell script during startup. This script is responsible for the following items:

DCV: configuration of the web-url-path parameter to match the path used with the ALB, authentication with the DCVSM Broker, and disabling of the automatic console session.

DCVSM agent: configuration of the connectivity to the DCVSM Broker on the HeadNode (IP, certificate) and of the tags (same tags as the EC2 instance, see Cluster termination).

A scheduled task to clean up the instance in some cases (see Instance cleanup ScheduledTask).

Running a custom PowerShell script if it exists: if you put a winec2_config.ps1 file inside the custom directory of CCME in its S3 bucket, then this script will be executed during startup. You can use this script for example to mount additional file systems, add further configurations for Windows, or even join the instance to your Active Directory. Important: if this script reboots the instance, then you need to set CCME_WIN_CUSTOM_CONF_REBOOT to true.

Sending a message to SNS to register the instance with the CCME ALB.

Restarting the DCV and DCVSM Agent services.

The instance is tagged with the following tags:

All tags present in the Tags section of the ParallelCluster configuration file and parallelcluster:* tags created by ParallelCluster.

ccme_EF_USER: the username of the user who requested the session

ccme_CLUSTERNAME: the name of the cluster

ccme_HEADNODE_IP: the IP address of the HeadNode of the cluster

ccme_interactive_sessionUri: URI internal to EnginFrame to identify the session

ccme_EF_SPOOLER_NAME: name of the Spooler attached to the session in EnginFrame

parallelcluster:node-type: this tag cannot be changed and its value is always CCME_DCV. This allows the instances to be terminated along with the cluster.

All tags present in CCME_WIN_TAGS: tags described in this variable supersede the ones present in the Tags section of the ParallelCluster configuration file.

The user is redirected to the Sessions view of EnginFrame, and will have to wait for the instance to start (this can take several minutes). The instance will automatically be configured to join the DCVSM cluster, and apply a set of tags to recognize it as belonging to the user and the requested DCV session. Once the session is ready, the user will be redirected to the DCV session in their browser, or will download a DCV file containing the connection information.

DCV network streams will go through the Application Load Balancer (ALB) deployed by the Management Stack, just like the Linux DCV sessions.
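The tag precedence listed in the process above can be sketched as a layered merge. In this Python example, CCME_WIN_TAGS supersede the Tags section and parallelcluster:node-type is always forced to CCME_DCV, as the text states. Applying the ccme_* session tags after CCME_WIN_TAGS is an assumption of this sketch, and the function name is hypothetical.

```python
def windows_instance_tags(cluster_tags: dict, win_tags: dict, session_tags: dict) -> dict:
    """Merge the tag sources applied to a Windows DCV instance."""
    tags = dict(cluster_tags)   # Tags section and parallelcluster:* tags
    tags.update(win_tags)       # CCME_WIN_TAGS supersede the Tags section
    tags.update(session_tags)   # ccme_* tags (ordering assumed, not documented)
    # This tag cannot be changed: it lets the instance be cleaned up with the cluster.
    tags["parallelcluster:node-type"] = "CCME_DCV"
    return tags


# Hypothetical values, for illustration only.
print(windows_instance_tags(
    {"Project": "default", "parallelcluster:cluster-name": "my-cluster"},
    {"Project": "cad-team"},
    {"ccme_EF_USER": "alice", "ccme_CLUSTERNAME": "my-cluster"},
))
```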

Limiting the number of sessions per user

EnginFrame provides a way to limit the number of sessions a user can launch at the same time.

Edit the DCV Windows service that you have created in EnginFrame (as an administrator, click on Switch to Admin View, then Manage/Services, and edit your service), and click on Settings. There you’ll find two parameters that you can configure to limit the number of sessions:

Session Class: a string used to identify the type of session you are running. You can simply set it to windows for example.

Max number of Sessions: the number of concurrent sessions allowed to a user. If you leave it empty, users can launch as many sessions as they want, though they’ll be prompted to reuse an existing session if any. If you set it to 1, then only 1 session will be allowed.

Terminating a DCV session and instance cleanup to prevent overspendings

CCME embeds a set of functions and safeguards to prevent overspending and terminate all Windows instances that are no longer required. This section presents these mechanisms.

Instance shutdown

All Windows instances are launched through a CCME EC2 launch template.

This launch template sets the InstanceInitiatedShutdownBehavior parameter of the instance to terminate.

This means that if a shutdown command is issued on the instance, the instance is terminated instead of being stopped (a stopped instance would still incur charges for its attached storage).

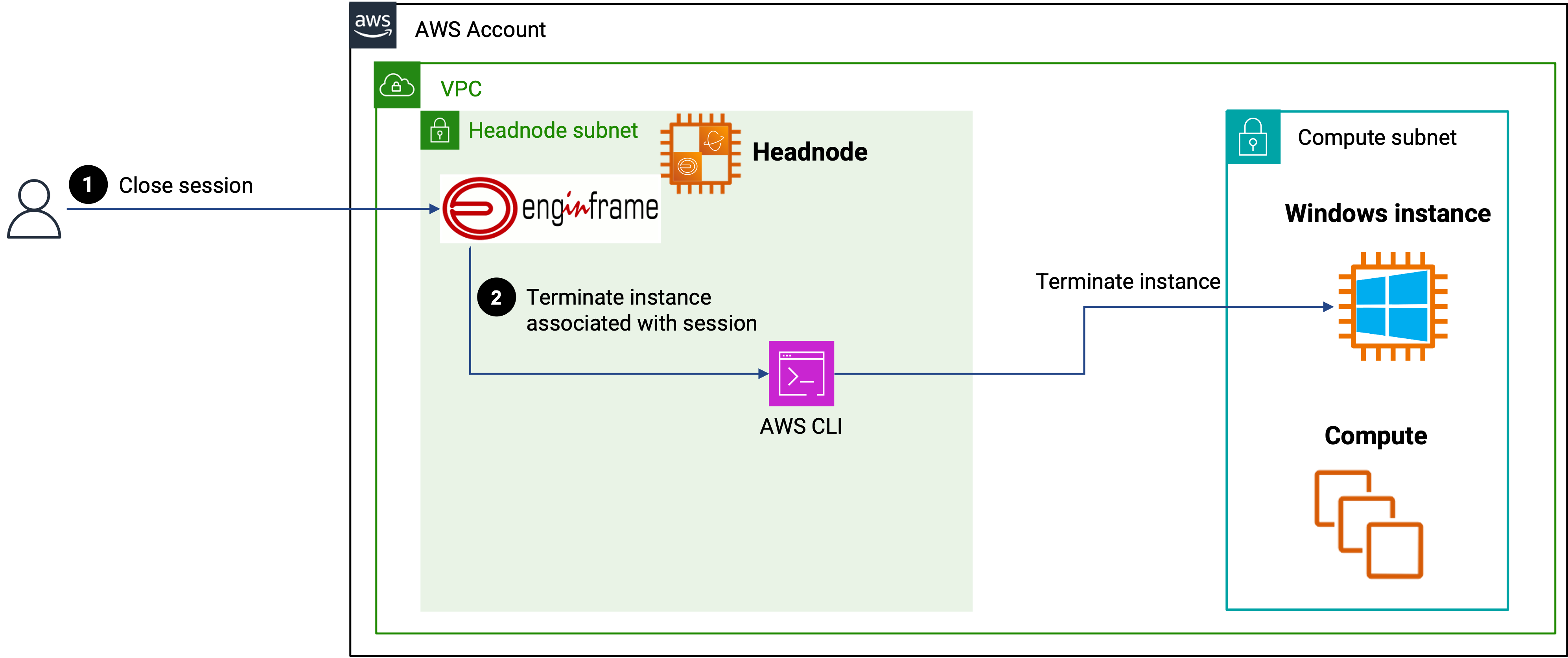

Closing the session from EnginFrame

The best and fastest way to clean up a Windows instance is simply to close the session from EnginFrame. The CCME EF plugin will terminate the instance associated with the session as soon as you request to close it.

Warning

Simply disconnecting from the DCV client or from Windows will not directly trigger the termination of the instance. The instance might, however, be terminated after CCME_WIN_INACTIVE_SESSION_TIME seconds (see Instance cleanup ScheduledTask).

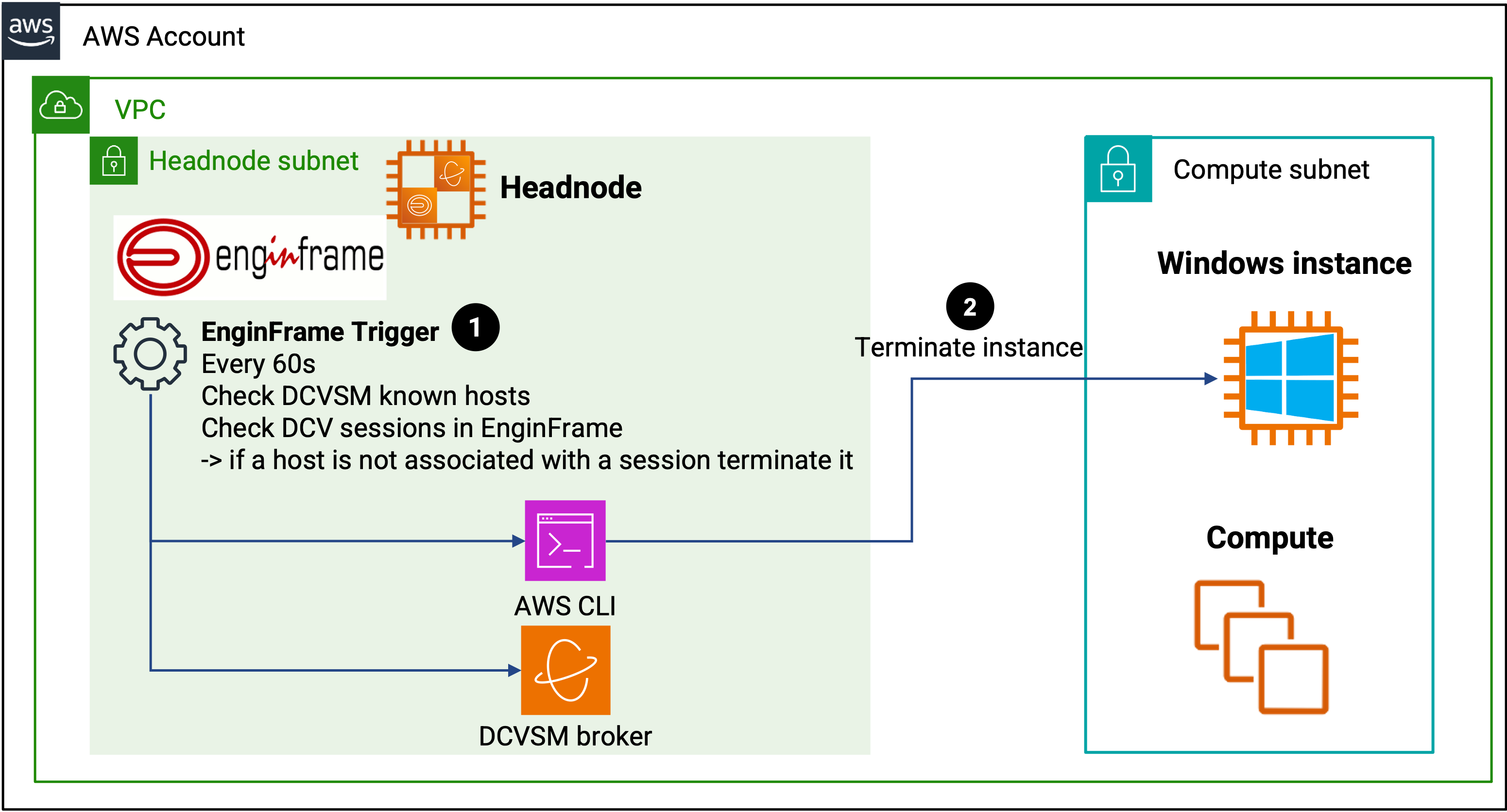

EnginFrame cleanup trigger

The CCME EF plugin embeds an EnginFrame trigger that periodically (every 60 seconds) checks known DCVSM hosts and DCV sessions. If it detects that a host no longer has a session (e.g., if the session has been closed from within Windows, not from EnginFrame), the trigger terminates the corresponding instance.
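The selection made by this trigger amounts to finding known hosts that have no remaining session. The Python sketch below models that check on plain data structures. It does not call EnginFrame, DCVSM or AWS, and all names in it are hypothetical.

```python
def hosts_without_session(known_hosts: set, sessions_by_host: dict) -> set:
    """Return the hosts that no longer carry any DCV session."""
    # A host is a termination candidate if it is missing from the mapping
    # or mapped to an empty list of sessions.
    return {host for host in known_hosts if not sessions_by_host.get(host)}


# Hypothetical snapshot taken during one 60-second round.
hosts = {"i-aaa", "i-bbb", "i-ccc"}
sessions = {"i-aaa": ["session-1"], "i-bbb": []}
print(sorted(hosts_without_session(hosts, sessions)))
```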

Instance cleanup ScheduledTask

All Windows instances are configured with a periodic ScheduledTask that terminates the instance if one of the following conditions is met:

No DCV session has been active for more than CCME_WIN_INACTIVE_SESSION_TIME seconds.

No DCV session is available and more than CCME_WIN_NO_SESSION_TIME seconds have passed since the instance was started.

The DCVSM broker (on the HeadNode) could not be contacted for more than CCME_WIN_NO_BROKER_COMMUNICATION_TIME seconds.
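The three conditions above, together with the rule that a value of 0 deactivates a control, can be expressed as a single decision function. The Python sketch below is an illustrative model only: the real task runs on the Windows instance, and the InstanceState fields are hypothetical measurements whose exact definition in CCME is not described here.

```python
from dataclasses import dataclass


@dataclass
class InstanceState:
    seconds_since_active_session: float  # time since a DCV session was last active
    has_session: bool                    # whether a DCV session currently exists
    uptime_seconds: float                # time since the instance was started
    seconds_since_broker_contact: float  # time since the broker last answered


def should_terminate(state: InstanceState,
                     inactive_session_time: int = 600,
                     no_session_time: int = 600,
                     no_broker_communication_time: int = 600) -> bool:
    """Return True if any enabled cleanup condition is met (0 disables a control)."""
    inactive = (inactive_session_time > 0
                and state.seconds_since_active_session > inactive_session_time)
    no_session = (no_session_time > 0
                  and not state.has_session
                  and state.uptime_seconds > no_session_time)
    no_broker = (no_broker_communication_time > 0
                 and state.seconds_since_broker_contact > no_broker_communication_time)
    return inactive or no_session or no_broker


idle = InstanceState(700, True, 3600, 5)
print(should_terminate(idle))                           # inactivity threshold exceeded
print(should_terminate(idle, inactive_session_time=0))  # inactivity control disabled
```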

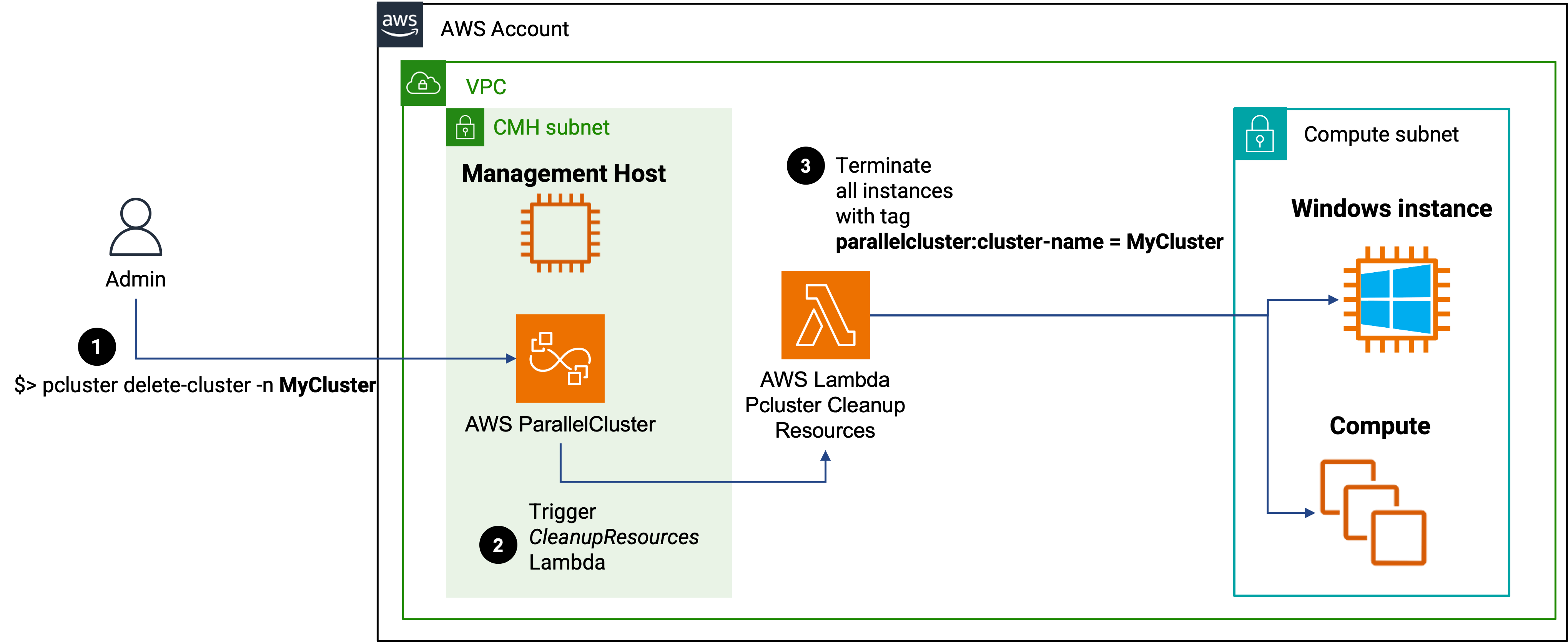

Cluster termination

All Windows instances launched as part of a CCME cluster inherit the parallelcluster:cluster-name tag from the ParallelCluster cluster, and are also tagged with parallelcluster:node-type=CCME_DCV (the role associated with the AWS ParallelCluster Cleanup Lambda has been updated to allow cleanup of instances with this tag).

Thus, when you terminate the cluster, all instances with the same parallelcluster:cluster-name tag as the cluster will be terminated

by ParallelCluster through its cleanup Lambda.